Introduction

Amazon Web Services (AWS) offers a variety of cloud services that enable developers to build scalable and robust applications. One such service is Amazon Simple Storage Service (Amazon S3), which allows you to store and retrieve any amount of data at any time. In many applications, you might need to upload files to AWS S3 and subsequently convert those files to a different format, such as Base64, for various purposes like data transfer or storage in a database.

In this blog post, we’ll walk you through uploading a document to Amazon S3 and converting the uploaded file to a Base64 string. The guide covers the essential steps: setting up an Amazon S3 bucket, configuring your .NET Web API, and converting the stored file to Base64. By the end of this tutorial, you’ll understand the full process and be able to implement it in your own projects.

How to Store a File in Amazon S3:

Create an AWS Account: If you don’t have an AWS account, you need to create one. Visit the AWS Management Console to get started.

Set Up an S3 Bucket:

1. Go to the S3 service in the AWS Management Console.

2. Click on “Create bucket”.

3. Provide a unique name for your bucket and choose the appropriate region.

4. Configure bucket settings as needed and complete the creation process.

Generate Access Keys:

1. Navigate to the IAM (Identity and Access Management) service.

2. Create a new user with programmatic access.

3. Attach the necessary policies to grant access to your S3 bucket.

4. Save the Access Key ID and Secret Access Key, as you’ll need them for your .NET application.

Steps to Upload a File to an Amazon S3 Bucket through a .NET Web API

Setting Up Your .NET Project:

1. Create a new .NET Web API project using your preferred method (e.g., Visual Studio or CLI).

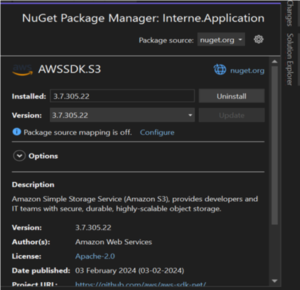

2. Install the AWSSDK.S3 package (the Amazon S3 client from the AWS SDK for .NET) using NuGet Package Manager, or from the CLI with: dotnet add package AWSSDK.S3

Configuring AWS Credentials

To upload a file to Amazon S3, you need the bucket created earlier and the following credentials:

- Access Key ID

- Secret Access Key (named SecurityKey in this post’s configuration)

A bucket is essentially a container where you can store objects (files, data, images, videos, etc.) within Amazon S3. Each object resides in a specific bucket.

Adding Configuration to App Settings (add this section to appsettings.json):

"AmazonS3": {
  "BucketName": "BucketName",
  "AccessKey": "Your Amazon Access Key",
  "SecurityKey": "Your Security Key",
  "BaseUrl": "Your Base URL"
}

Note: storing keys in app settings is not a recommended approach. In a production environment, hardcoding sensitive information poses a significant security risk to your application. To mitigate this risk, use a service such as AWS Secrets Manager or another vault solution to store and manage keys and credentials securely.
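
As a small step in that direction, the sketch below reads the credentials from environment variables instead of appsettings.json. The S3Credentials record and S3CredentialLoader class are hypothetical names introduced for illustration, not part of the AWS SDK; AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY are the variable names AWS tooling conventionally uses. It assumes .NET 6 or later and is not a replacement for a proper secrets manager.

```csharp
using System;

// Hypothetical holder for the two credential values used in this post.
public sealed record S3Credentials(string AccessKey, string SecretKey);

public static class S3CredentialLoader
{
    // Loads credentials through the supplied lookup; when none is given,
    // values are read from the process environment variables.
    public static S3Credentials Load(Func<string, string> lookup = null)
    {
        if (lookup == null)
        {
            lookup = name => Environment.GetEnvironmentVariable(name);
        }

        return new S3Credentials(
            Require(lookup, "AWS_ACCESS_KEY_ID"),
            Require(lookup, "AWS_SECRET_ACCESS_KEY"));
    }

    // Fails fast with a clear message when a value is missing or blank.
    private static string Require(Func<string, string> lookup, string name)
    {
        string value = lookup(name);
        if (string.IsNullOrWhiteSpace(value))
        {
            throw new InvalidOperationException($"Missing required setting '{name}'.");
        }
        return value;
    }
}
```

Calling S3CredentialLoader.Load() at startup surfaces a missing variable immediately rather than at the first upload. The lookup parameter exists only so the loader can be exercised without touching the real environment.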

1. Create the AmazonS3 settings class

// Strongly typed view of the "AmazonS3" configuration section
public class AmazonS3
{
    public string AccessKey { get; set; }
    public string SecurityKey { get; set; }
    public string BucketName { get; set; }
    public string BaseUrl { get; set; }
}

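Register the settings and the upload service in Program.cs:

// Program.cs (after var builder = WebApplication.CreateBuilder(args);)
// Bind the "AmazonS3" configuration section to the AmazonS3 settings class
builder.Services.Configure<AmazonS3>(builder.Configuration.GetSection("AmazonS3"));
// Register the upload service for dependency injection
builder.Services.AddScoped<AmazonDocumentUploadService>();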

Create the AmazonDocumentUploadService class:

using System;
using System.IO;
using System.Threading.Tasks;
using Amazon;
using Amazon.S3;
using Amazon.S3.Model;
using Interne.Application.Helpers; // namespace of UnixTimeStampHelper
using Microsoft.AspNetCore.Http;
using Microsoft.Extensions.Options;

public class AmazonDocumentUploadService
{
    private readonly AmazonS3 amazonS3;

    public AmazonDocumentUploadService(IOptions<AmazonS3> amazonS3Options)
    {
        amazonS3 = amazonS3Options.Value;
    }

    // Uploads a document to Amazon S3 and returns its URL in the response
    public async Task<Response> UploadDocument(IFormFile document)
    {
        // Reject missing or empty files up front
        if (document == null || document.Length == 0)
        {
            return new Response { IsSuccess = 0, Message = "No file was uploaded.", HttpStatusCode = 400 };
        }

        try
        {
            // Prefix the file name with a Unix timestamp to make the object name unique
            string docName = !string.IsNullOrEmpty(document.FileName)
                ? (UnixTimeStampHelper.DateTimeToUnixTimestamp(DateTime.Now) + document.FileName.Trim()).Replace(" ", "_")
                : "";

            // Object key: "<month name>/<document name>"
            string key = string.Format("{0}/{1}", DateTime.Now.ToString("MMMM").ToLower(), docName);

            // Use the region your bucket is located in (check the bucket's properties in the AWS console)
            using (IAmazonS3 client = new AmazonS3Client(amazonS3.AccessKey, amazonS3.SecurityKey, RegionEndpoint.APSouth1))
            using (var ms = new MemoryStream())
            {
                // Copy the document content to a memory stream
                await document.CopyToAsync(ms);
                ms.Position = 0; // rewind so the upload reads from the start

                var request = new PutObjectRequest
                {
                    BucketName = amazonS3.BucketName,
                    Key = key,
                    InputStream = ms
                };

                // Upload the document to S3
                await client.PutObjectAsync(request);
            }

            // Return a success response with the document URL
            return new Response { IsSuccess = 1, Message = "Document Uploaded Successfully", HttpStatusCode = 201, Result = new { URL = amazonS3.BaseUrl + key } };
        }
        catch (Exception ex)
        {
            // Log the exception or handle it as your application requires
            return new Response { IsSuccess = 0, Message = $"Error uploading document to S3: {ex.Message}", HttpStatusCode = 500 };
        }
    }
}
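
The service returns a Response object whose definition is not shown in this post. A minimal sketch of what it might look like follows; the property names are taken from how the code uses them, while the types (in particular declaring Result as dynamic so that a value like Result.URL can be read later) are assumptions.

```csharp
// Hypothetical shape of the Response type used by the service and controller.
// Property names come from the post's code; the types are assumed.
public class Response
{
    // 1 = success, 0 = failure
    public int IsSuccess { get; set; }

    // Human-readable outcome message
    public string Message { get; set; } = string.Empty;

    // HTTP status code the caller should return
    public int HttpStatusCode { get; set; }

    // Arbitrary payload, for example an object with a URL property
    public dynamic Result { get; set; }
}
```

A strongly typed result class would be safer than dynamic in a larger project, since typos in member names would then be caught at compile time.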

Use this helper to create a unique name for your file:

namespace Interne.Application.Helpers
{
    public class UnixTimeStampHelper
    {
        // Converts seconds since the Unix epoch to a UTC DateTime
        public static DateTime UnixTimeStampToDateTime(double unixTimeStamp)
        {
            DateTime epoch = new DateTime(1970, 1, 1, 0, 0, 0, 0, DateTimeKind.Utc);
            return epoch.AddSeconds(unixTimeStamp);
        }

        // Converts a DateTime to seconds since the Unix epoch
        public static double DateTimeToUnixTimestamp(DateTime dateTime)
        {
            return (TimeZoneInfo.ConvertTimeToUtc(dateTime) -
                    new DateTime(1970, 1, 1, 0, 0, 0, 0, DateTimeKind.Utc)).TotalSeconds;
        }
    }
}
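
As an alternative sketch, the same kind of object key can be built with the framework’s built-in DateTimeOffset.ToUnixTimeSeconds, without a custom helper. S3KeyBuilder is a hypothetical name, and the underscore between timestamp and file name is a choice made here, not the format used elsewhere in this post. The month name is formatted with the invariant culture so the key does not change with the server’s locale.

```csharp
using System;
using System.Globalization;
using System.IO;

public static class S3KeyBuilder
{
    // Builds "<month>/<unix-seconds>_<file-name>" with spaces replaced by underscores.
    public static string Build(string fileName, DateTimeOffset timestamp)
    {
        if (string.IsNullOrWhiteSpace(fileName))
        {
            throw new ArgumentException("File name is required.", nameof(fileName));
        }

        // Drop any directory part recognised by the current OS, then replace spaces.
        string safeName = Path.GetFileName(fileName.Trim()).Replace(' ', '_');

        // Normalise to UTC so the key does not depend on the server's time zone.
        DateTimeOffset utc = timestamp.ToUniversalTime();
        string month = utc.ToString("MMMM", CultureInfo.InvariantCulture).ToLowerInvariant();

        return $"{month}/{utc.ToUnixTimeSeconds()}_{safeName}";
    }
}
```

For example, S3KeyBuilder.Build("my file.png", DateTimeOffset.UtcNow) yields a key such as january/1705276800_my_file.png. Two uploads of the same file name within the same second would still collide; appending a Guid is one way to rule that out.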

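2. Code to convert a file stored in Amazon S3 to Base64 using its key:

Create this method in the AmazonDocumentUploadService class:

// key is the S3 object key that was set when the file was uploaded
public async Task<string> GetDocumentBase64(string key)
{
    // The upload response returns BaseUrl + key, so strip BaseUrl if a full URL was passed in
    if (!string.IsNullOrEmpty(amazonS3.BaseUrl) && key.StartsWith(amazonS3.BaseUrl, StringComparison.OrdinalIgnoreCase))
    {
        key = key.Substring(amazonS3.BaseUrl.Length);
    }

    // Replace with the region your bucket is located in
    using (var client = new AmazonS3Client(amazonS3.AccessKey, amazonS3.SecurityKey, RegionEndpoint.APSouth1))
    {
        GetObjectRequest request = new GetObjectRequest
        {
            BucketName = amazonS3.BucketName,
            Key = key
        };

        using (GetObjectResponse response = await client.GetObjectAsync(request))
        using (MemoryStream memoryStream = new MemoryStream())
        {
            // Read the whole object into memory, then encode it
            await response.ResponseStream.CopyToAsync(memoryStream);
            byte[] fileBytes = memoryStream.ToArray();
            return Convert.ToBase64String(fileBytes);
        }
    }
}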

Create the controller:

using AmazonS3.Model;
using Microsoft.AspNetCore.Mvc;

namespace AmazonS3.Controllers
{
    [ApiController]
    [Route("[controller]")]
    public class DocumentUploadController : ControllerBase
    {
        private readonly AmazonDocumentUploadService _uploadService;

        public DocumentUploadController(AmazonDocumentUploadService uploadService)
        {
            _uploadService = uploadService;
        }

        [HttpPost("upload/document")]
        public async Task<IActionResult> UploadDocument(IFormFile document)
        {
            if (document == null || document.Length == 0)
            {
                return BadRequest("No file uploaded.");
            }

            try
            {
                // Upload the document
                var response = await _uploadService.UploadDocument(document);
                if (response.IsSuccess != 1)
                {
                    return StatusCode(response.HttpStatusCode, response);
                }

                // Get the Base64 of the uploaded file.
                // Assumes Response.Result is declared as dynamic. The anonymous result
                // object is read-only, so a new one is built to carry the Base64 string.
                string url = response.Result.URL;
                string base64 = await _uploadService.GetDocumentBase64(url);
                response.Result = new { URL = url, Base64 = base64 };

                return Ok(response);
            }
            catch (Exception ex)
            {
                return StatusCode(500, $"Internal server error: {ex.Message}");
            }
        }
    }
}

Code Summary: The controller exposes an HTTP POST endpoint that accepts a file. The service uses the AWS SDK’s AmazonS3Client to upload the file to the configured S3 bucket, then reads the stored object back and returns it as a Base64 string.

Converting an Uploaded File to Base64

After uploading a file to AWS S3, one common approach is to retrieve the file as a byte array and then convert it into a Base64 string. This method resolves several issues that arise when working with files across different platforms and environments, such as:

- Cross-platform Compatibility: Base64 encoding ensures the file can be transferred between systems that may handle binary data inconsistently, ensuring uniformity across different platforms.

- Client-Side Application Compatibility: Many client-side technologies, like JavaScript in web browsers, can easily handle Base64 strings, making it simpler to process and display files without dealing with raw binary data.

- Avoiding Direct Exposure of S3 URLs: By converting the file into Base64 and embedding it in the response, you can prevent the direct exposure of S3 URLs, adding a layer of security, particularly for sensitive files.
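
The trade-off is size: Base64 represents every 3 bytes of input as 4 characters, so the encoded text is roughly a third larger than the file. The sketch below, using only the .NET base library, computes the encoded length and checks that a round trip returns the original bytes. Base64Sizing is a hypothetical helper name.

```csharp
using System;

public static class Base64Sizing
{
    // Number of characters Convert.ToBase64String produces for byteCount bytes
    // (padded output, no line breaks): 4 characters per started group of 3 bytes.
    public static long EncodedLength(long byteCount) => 4 * ((byteCount + 2) / 3);

    // Encodes and decodes the data, returning true when the bytes survive unchanged.
    public static bool RoundTrips(byte[] data)
    {
        string encoded = Convert.ToBase64String(data);
        byte[] decoded = Convert.FromBase64String(encoded);
        return decoded.AsSpan().SequenceEqual(data);
    }
}
```

By this formula a 3,000,000-byte file becomes 4,000,000 characters of Base64, which is worth keeping in mind when embedding files in JSON responses.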

Alternatives to Base64 Conversion

Although Base64 encoding addresses these issues, there are more efficient alternatives that can achieve similar results without the overhead of encoding:

- Pre-signed URLs: AWS S3 provides pre-signed URLs, which offer secure, temporary access to files without requiring encoding. This avoids the increased file size that comes with Base64 and allows direct binary transfers.

- Multipart Uploads/Downloads: APIs supporting multipart form-data allow for the direct transfer of binary files, eliminating the need to convert files into Base64. This method is more efficient, especially for large files.

- Streaming: For large files, streaming data in chunks can improve performance by reducing memory usage. This method avoids the need to load the entire file into memory or encode it in Base64.
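
If Base64 output is still required, the encoding itself can be streamed so the file is never held in memory as one byte array. The sketch below is one possible approach rather than the method used earlier in this post: it pipes any source stream through the framework’s ToBase64Transform into a destination stream. Base64Streaming is a hypothetical helper name, and the code assumes .NET 6 or later.

```csharp
using System.IO;
using System.Security.Cryptography;
using System.Threading.Tasks;

public static class Base64Streaming
{
    // Copies source to destination, Base64-encoding the bytes chunk by chunk.
    // The output is ASCII Base64 text without line breaks.
    public static async Task EncodeAsync(Stream source, Stream destination)
    {
        using var transform = new ToBase64Transform();

        // leaveOpen keeps the destination usable after the encoder is disposed.
        await using (var encoder = new CryptoStream(
            destination, transform, CryptoStreamMode.Write, leaveOpen: true))
        {
            await source.CopyToAsync(encoder);
        } // disposing the CryptoStream writes the final padded block
    }
}
```

In the S3 case, source could be the ResponseStream of a GetObjectResponse and destination the HTTP response body, so memory use stays bounded regardless of file size.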

Conclusion

We’ve learned about Amazon S3, how to upload a document to an Amazon S3 bucket, and how to convert the stored file to Base64 using its key. Amazon S3 offers a reliable and cost-effective solution for storing and managing data in the cloud. By following the outlined steps and adapting the provided code, developers can integrate Amazon S3 into their applications for efficient file storage.

About the Author

Harsh Thakur

(Associate Software Engineer)